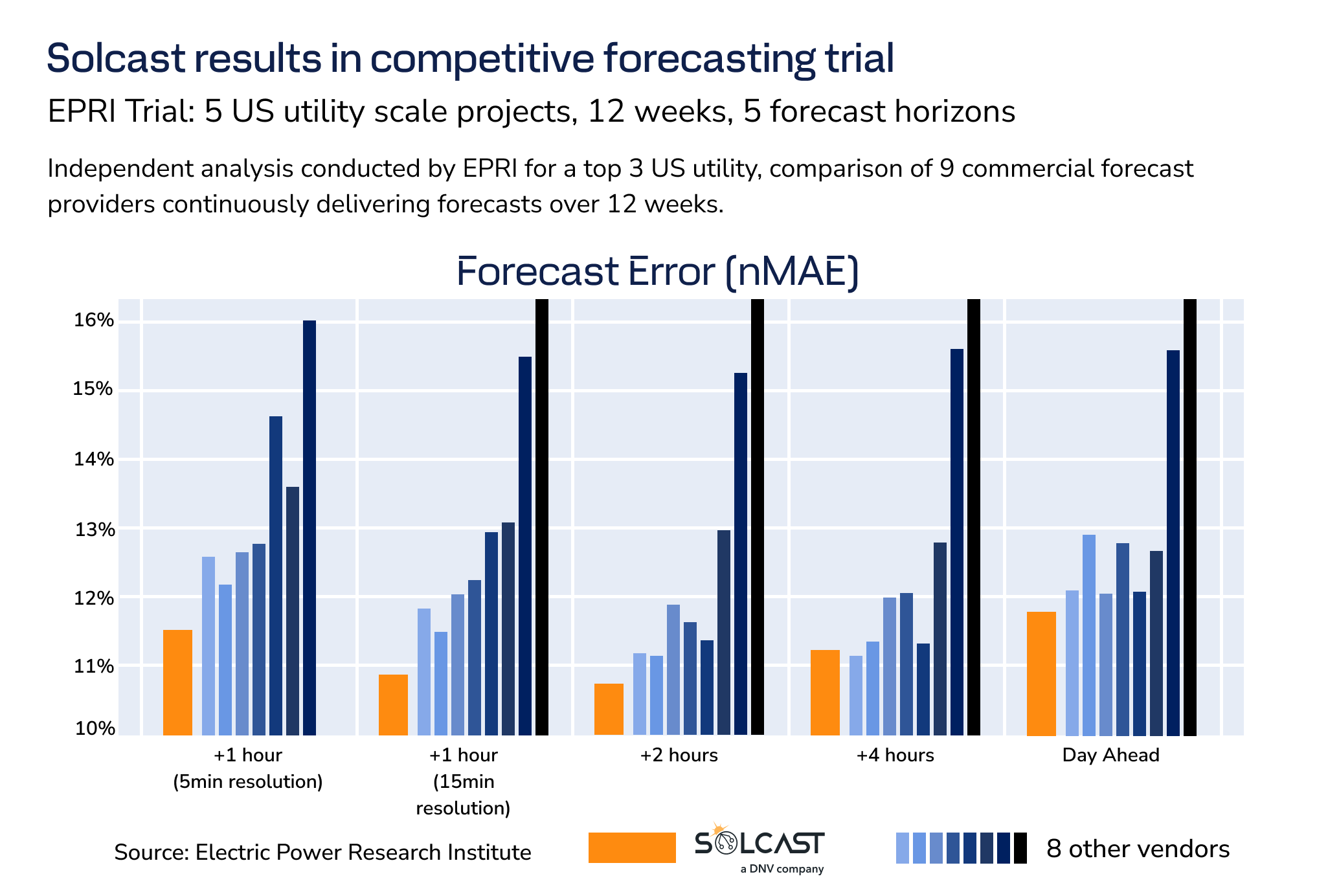

You may have heard the recent news that Solcast achieved the lowest error overall amongst 9 vendors participating in a major competitive forecast accuracy trial.

The trial, conducted by the independent US nonprofit Electric Power Research Institute (EPRI), is the world’s most comprehensive open solar production forecasting trial of the past five years. It was run in partnership with a top-3 US utility and spanned 12 weeks, 5 large solar plants, and 5 forecast horizons ranging from hour-ahead to day-ahead. Read more about the trial in our recent blog article.

Inside Solcast: Living the trial

The Solcast team is driven to be the best: to create the data that enables the next steps of the energy transition. We pride ourselves on the robustness and quality of the data we produce. After seven years of relentless improvement, it’s exciting for us when we get the chance to compete in an evaluation or trial.

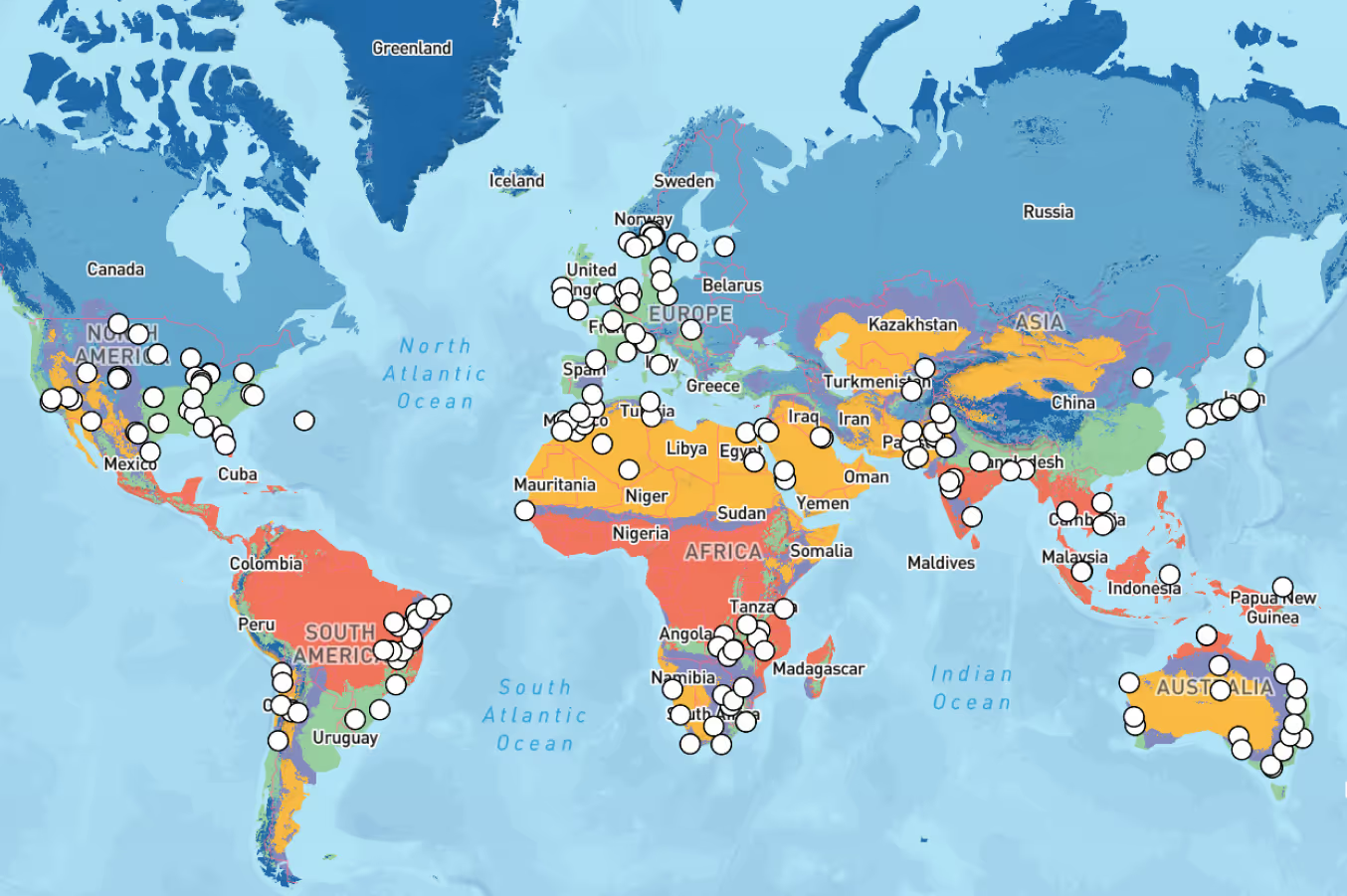

We are very accustomed to accuracy evaluations. Our customers and prospective customers conduct many hundreds of them per year; so many, in fact, that we have developed detailed guides and internal processes to make evaluations as painless as possible and to share what we have learnt.

In general, we suggest that most customers avoid a “live trial” (collecting forecasts continuously over a period of months), because it demands a very large investment of time and effort on the customer’s side. Instead, we suggest starting with existing analyses and publications. We can share data from our existing accuracy analyses at relevant nearby weather stations for customers to analyse. If more evidence is needed, we offer data from our four-year global archive of historical forecasts, so customers can test our accuracy at their own sites. Our Python SDK also includes tools for quickly evaluating forecasts against a customer’s own measurements.
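For readers running this kind of evaluation themselves, the core arithmetic is simple. The sketch below is plain Python, not the Solcast Python SDK (whose functions are not reproduced here). It computes mean absolute error, root-mean-square error and bias, plus versions normalised by plant capacity, which is one common convention for comparing plants of different sizes. It assumes the forecast and measurement series are already aligned on the same timestamps with bad periods removed; the function name `error_metrics` is hypothetical.

```python
import math


def error_metrics(forecast_mw, measured_mw, capacity_mw):
    """Compare a forecast with measurements (hypothetical helper, not an SDK call).

    Assumes both lists cover the same timestamps, in the same order, with
    missing or contaminated periods already removed.
    """
    if len(forecast_mw) != len(measured_mw) or not forecast_mw:
        raise ValueError("need two non-empty series of equal length")

    # Positive error means the forecast was too high.
    errors = [f - m for f, m in zip(forecast_mw, measured_mw)]
    n = len(errors)

    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    bias = sum(errors) / n

    return {
        "mae_mw": mae,
        "rmse_mw": rmse,
        "bias_mw": bias,
        # Normalising by capacity makes plants of different sizes comparable.
        "nmae_pct": 100.0 * mae / capacity_mw,
        "nrmse_pct": 100.0 * rmse / capacity_mw,
    }


if __name__ == "__main__":
    metrics = error_metrics([10.0, 20.0, 30.0], [12.0, 18.0, 30.0], capacity_mw=50.0)
    print(metrics)
```

Running the same function over each vendor’s forecasts for the same plant and period gives a like-for-like comparison. Whether to include night-time zeros, and which normaliser to use, are choices worth fixing before the evaluation starts, since both change the numbers.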

We only suggest a “live trial” as a last step, when the earlier steps aren’t sufficient, and when the significant effort and time required is justified.

This trial was indeed a “live trial”. The team at EPRI and their US utility partner had the time, expertise and resources to conduct such an exercise, which has so far spanned 23 months from invitation, with the final report still forthcoming. A major undertaking!


As a team, we found this trial one of the most exciting evaluations we have ever done, because of the open invitation and competition, the robust trial scope, and the fact that entrants could see their results against their (anonymised) competitors every day. The trial covered only US sites, but our major competitors are global, and the invitation was very open. EPRI gave each vendor a code name, such as Ruby or Sapphire. Solcast was “Pearl”.

It’s very rare to see daily updates of our accuracy scores against competitors like this, which made the trial a fun event to watch. It was like having front-row seats to our own show.

As well as ensuring our forecasts were optimally configured, we set up tools for monitoring our performance and measurement data quality: accuracy dashboards, and a new TV screen in the office, personally installed by our CTO, Alex Jarkey!


Why and how did Solcast achieve the lowest forecast error?

Nobody at Solcast can take credit for any “new magic algorithm” that achieved this result. Instead, we all take pride in the results of many years of concerted effort.

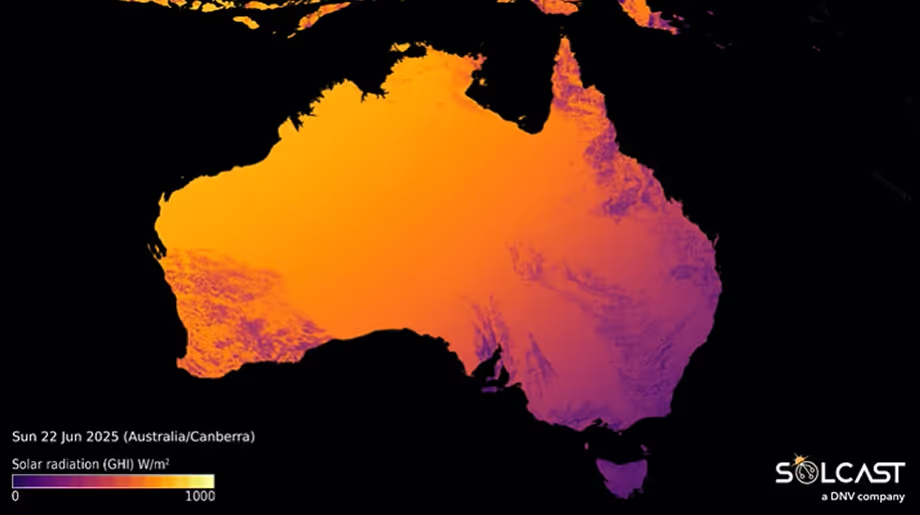

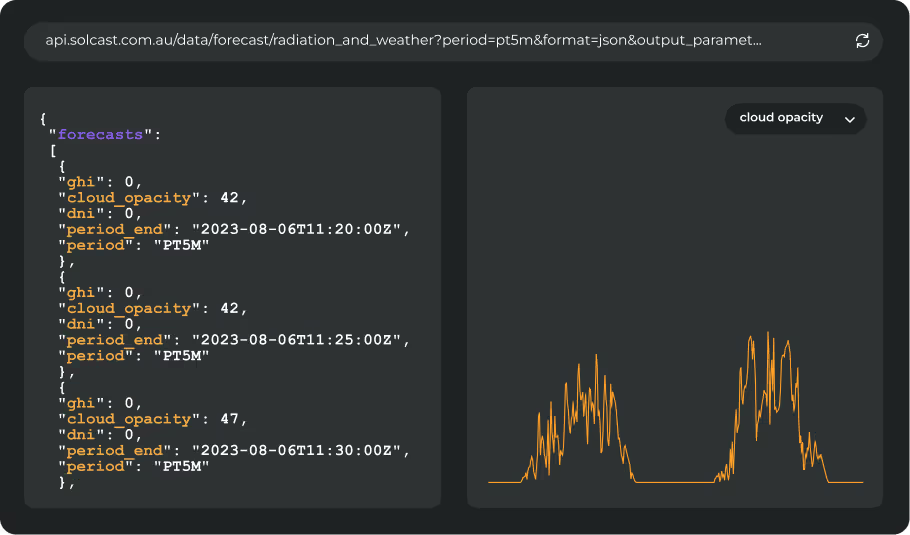

Solcast’s data is made solely for solar irradiance measurement and forecasting. For seven years we have continuously improved the inputs and algorithms we use to track clouds and weather, as well as our models of solar-specific effects like aerosols and soiling. Read more about these “foundational” elements of Solcast’s data here. These are the strong data foundations that make a result like this possible. We call this data our “Radiation and Weather” data, and many customers (especially experts in ML and/or physical solar models) use this data directly.

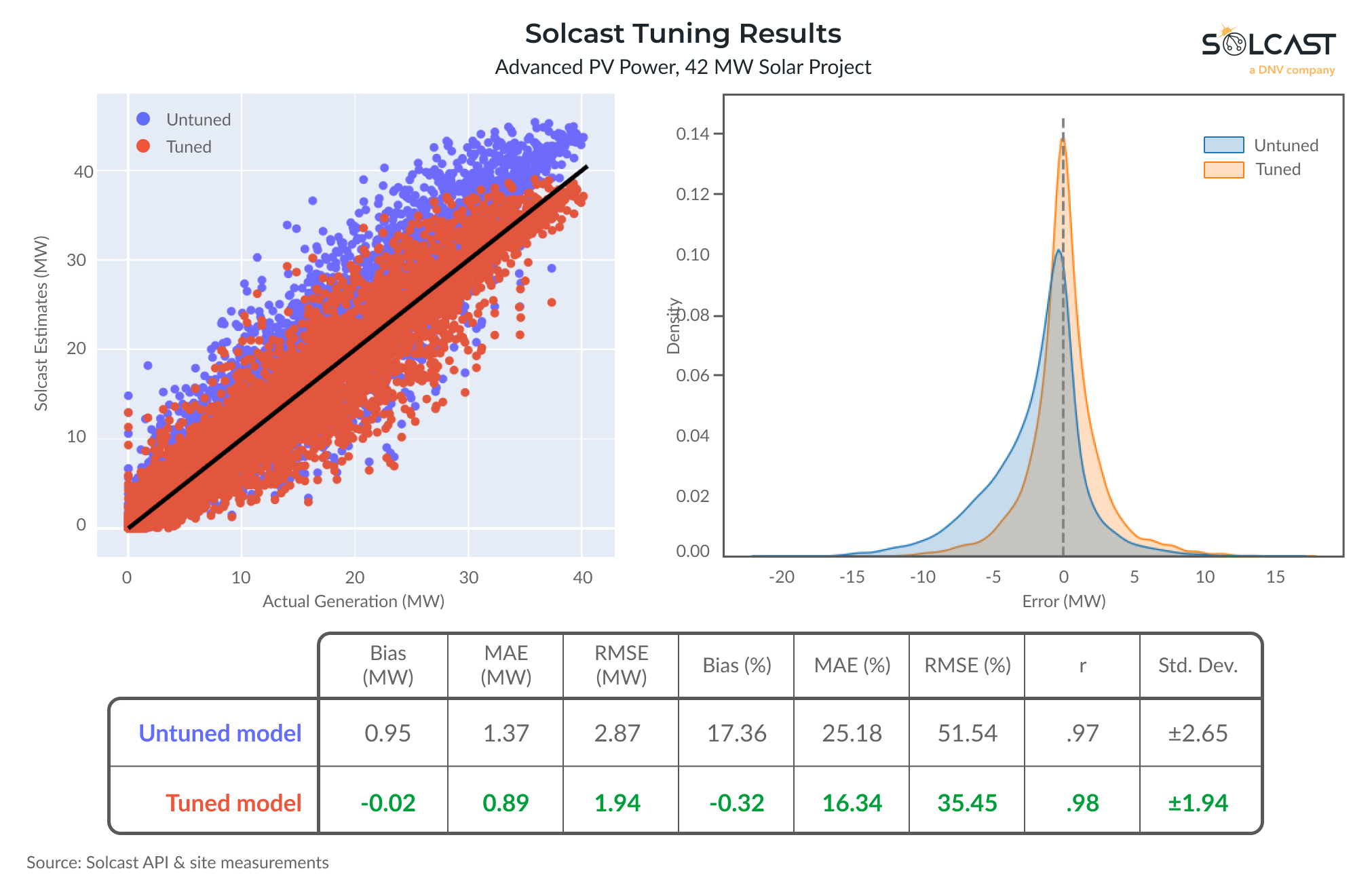

The biggest reason we were able to convert these strong data foundations into such a great production forecast result is the “Accuracy Assist” mode of Solcast’s Advanced PV Model.

This model converts irradiance and weather data to PV power and is designed specifically for production forecasting at larger solar assets.
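To make the idea of an irradiance-to-power model concrete, here is a deliberately simplified sketch. It is not Solcast’s Advanced PV Model, whose internals this article doesn’t describe; it is a generic textbook-style chain: a linear temperature correction of DC output using a cell temperature estimated with the NOCT approximation, a flat inverter efficiency, and clipping at the plant’s AC capacity. It assumes plane-of-array irradiance is already available, and every default parameter (temperature coefficient, NOCT, inverter efficiency) is an assumed illustrative value. The names `PlantSpec` and `pv_power_mw` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PlantSpec:
    """Hypothetical plant description; defaults are illustrative assumptions."""
    dc_capacity_mw: float         # DC nameplate at standard test conditions
    ac_capacity_mw: float         # inverter (AC) export limit
    gamma_per_c: float = -0.004   # power temperature coefficient, per °C
    noct_c: float = 45.0          # nominal operating cell temperature, °C
    inverter_eff: float = 0.97    # flat inverter efficiency


def pv_power_mw(poa_wm2: float, air_temp_c: float, plant: PlantSpec) -> float:
    """Convert plane-of-array irradiance and air temperature to AC power (MW)."""
    if poa_wm2 <= 0.0:
        return 0.0
    # NOCT approximation: cell temperature rises linearly with irradiance.
    cell_temp_c = air_temp_c + (plant.noct_c - 20.0) / 800.0 * poa_wm2
    # DC output scales with irradiance relative to 1000 W/m², derated for heat.
    dc_mw = (plant.dc_capacity_mw * poa_wm2 / 1000.0
             * (1.0 + plant.gamma_per_c * (cell_temp_c - 25.0)))
    ac_mw = dc_mw * plant.inverter_eff
    # Inverters cap (clip) output at the AC rating.
    return max(0.0, min(ac_mw, plant.ac_capacity_mw))


if __name__ == "__main__":
    plant = PlantSpec(dc_capacity_mw=120.0, ac_capacity_mw=100.0)
    for poa in (0.0, 500.0, 1000.0):
        print(poa, pv_power_mw(poa, 25.0, plant))
```

Clipping matters for plants with a high DC/AC ratio: on a bright, mild day the DC side can exceed the inverter rating, so modelled output flattens at AC capacity. Parameters like these are the kind of unknowns that a tuning step could estimate from measured production.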

Accuracy Assist is the mode selected by customers who want a solar production forecast (MW) of the highest accuracy. In this mode, Solcast provides not only the features of the Advanced PV Model and its radiation and weather data inputs, but also hands-on help from our team in setting up PV plants in the model. Our team takes a PV power measurement history (ideally the past 12 months) and “tunes” the model to achieve the highest possible accuracy. Read more about how our PV tuning models work in our recent blog.

For this trial, we received the locations and basic specifications of the trial PV plants, such as their AC and DC capacities. The plants were a mix of fixed-tilt and tracking systems, some with significant variation in geometry and technology within the same plant. We also received about 12 months of production history for the plants.

This data was all we needed to implement the Accuracy Assist mode.

- First, we always take a very close, human look at the measurements. If contaminated measurements (such as a period when the plant had a partial outage) get into the process, the tuning doesn’t work well and can even make the resulting forecasts worse.

- Second, we calibrate the Advanced PV Model to the cleaned measurements, by optimising the unknown model parameters.

- Lastly, we use machine learning to find and predict any remaining non-random errors.
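The three steps above can be illustrated with a toy pipeline. This is a minimal sketch under strong assumptions, not Solcast’s implementation. The human review in step one is represented by a hypothetical `outage` flag that a reviewer has already set; the “model” has a single unknown parameter `k` (power = k × plane-of-array irradiance) fitted by ordinary least squares; and, in place of machine learning, step three uses a deliberately simple per-hour-of-day mean residual correction to show the idea of learning non-random errors. All names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Record:
    """One hypothetical measurement interval."""
    hour: int              # local hour of day, 0-23
    poa_wm2: float         # plane-of-array irradiance input
    measured_mw: float     # metered plant output
    outage: bool = False   # set by a human reviewer (step 1)


def clean(records):
    """Step 1: keep daytime records that the reviewer has not flagged."""
    return [r for r in records if not r.outage and r.poa_wm2 > 0.0]


def calibrate_scale(records):
    """Step 2: least-squares estimate of k in power = k * poa."""
    num = sum(r.poa_wm2 * r.measured_mw for r in records)
    den = sum(r.poa_wm2 ** 2 for r in records)
    return num / den


def fit_hourly_bias(records, k):
    """Step 3 (simple stand-in for ML): mean remaining residual per hour."""
    sums, counts = defaultdict(float), defaultdict(int)
    for r in records:
        sums[r.hour] += r.measured_mw - k * r.poa_wm2
        counts[r.hour] += 1
    return {h: sums[h] / counts[h] for h in sums}


def corrected_forecast(poa_wm2, hour, k, bias):
    """Calibrated model output plus the learned correction for that hour."""
    return max(0.0, k * poa_wm2 + bias.get(hour, 0.0))


if __name__ == "__main__":
    history = [
        Record(10, 500.0, 51.0), Record(10, 800.0, 81.0),
        Record(14, 500.0, 49.0), Record(14, 800.0, 79.0),
        Record(12, 900.0, 10.0, outage=True),  # partial outage: excluded
        Record(2, 0.0, 0.0),                   # night-time: excluded
    ]
    cleaned = clean(history)
    k = calibrate_scale(cleaned)
    bias = fit_hourly_bias(cleaned, k)
    print(k, bias, corrected_forecast(600.0, 10, k, bias))
```

In this toy data, leaving the outage record in would drag `k` from 0.1 down to roughly 0.07 and bias every later forecast low, which is the failure mode the first step guards against.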

Accuracy Assist mode also provides for periodic reviews of newer measurements and forecast performance. Seasons change, for example, as do vegetation and soiling around the plant. Just as we do for our Accuracy Assist customers, a couple of times during this trial we reviewed the more recent measurements and checked that performance was still optimal.
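Part of a periodic review like this could be automated as a screening step before a human takes a closer look. The sketch below is a hypothetical helper with assumed thresholds, not a description of Solcast’s review process. It compares the capacity-normalised error over a recent window against the error recorded when the plant was last tuned, and also flags persistent bias. It only identifies plants worth a closer look; it does not re-tune anything.

```python
from statistics import mean


def review_flags(forecast_mw, measured_mw, capacity_mw, baseline_nmae_pct,
                 nmae_margin_pct=1.0, bias_limit_pct=2.0):
    """Return reasons to revisit a plant's tuning (empty list if none).

    Thresholds are illustrative assumptions, not recommended values.
    """
    if len(forecast_mw) != len(measured_mw) or not forecast_mw:
        raise ValueError("need two non-empty series of equal length")
    errors = [f - m for f, m in zip(forecast_mw, measured_mw)]
    nmae_pct = 100.0 * mean(abs(e) for e in errors) / capacity_mw
    nbias_pct = 100.0 * mean(errors) / capacity_mw

    reasons = []
    if nmae_pct > baseline_nmae_pct + nmae_margin_pct:
        reasons.append(f"nMAE {nmae_pct:.1f}% vs baseline {baseline_nmae_pct:.1f}%")
    # A persistent positive bias (forecast above measured) is what growing
    # soiling or vegetation shading would tend to produce.
    if abs(nbias_pct) > bias_limit_pct:
        reasons.append(f"bias {nbias_pct:+.1f}% of capacity")
    return reasons


if __name__ == "__main__":
    print(review_flags([50.0, 50.0, 50.0, 50.0], [45.0, 46.0, 44.0, 45.0],
                       capacity_mw=100.0, baseline_nmae_pct=3.0))
```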

What did we learn from this forecast accuracy trial?

We took two key learnings from this trial. We also think it holds an important lesson for customers planning their own evaluations.

For Solcast, the two key learnings we took were:

- Our intraday forecasts (often called “nowcasts”, especially for the next 0-3 hours) still hold a solid lead over the competition. Development of our cloud-tracking nowcast technology began even before Solcast was founded, and we continue to improve it with a range of meteorological and data science techniques.

- Our focussed 2021-2023 improvements to day-ahead forecasts worked. This trial was conducted in a market where day-ahead solar production forecasts have been a big deal for over a decade, so knowing we have caught and passed the competition is a good feeling. Progress never stops, however: since this trial we have made two more significant improvements! (When you test data from our historical forecast archive, you get data that reflects all the latest improvements.)

Finally, a lesson for customers.

If you’re thinking about a forecast evaluation exercise, consider carefully before doing a live trial like this one.

Believe it or not, the almost two-year timespan of this trial, from first vendor engagement to published results, is not that unusual compared with what we sometimes see when customers try to run live trials while continuing to operate their businesses.

It’s amazing that EPRI and their utility partner have dedicated their time, expertise and resources to this trial, so that the whole solar industry can benefit. However, rather than trying to replicate it, we suggest using this trial’s results and other published results, or our extensive historical forecast archive, to shortcut your own evaluation and get started on the results that matter most: your business results!